The intersection of music and technology has always been fertile ground for revolutionary change, and one of today's most intriguing results is robots that can play musical instruments. In this domain, engineers and programmers have created machines that not only understand the mechanics of various instruments but can also perform on them, expanding the traditional boundaries of music production and performance.

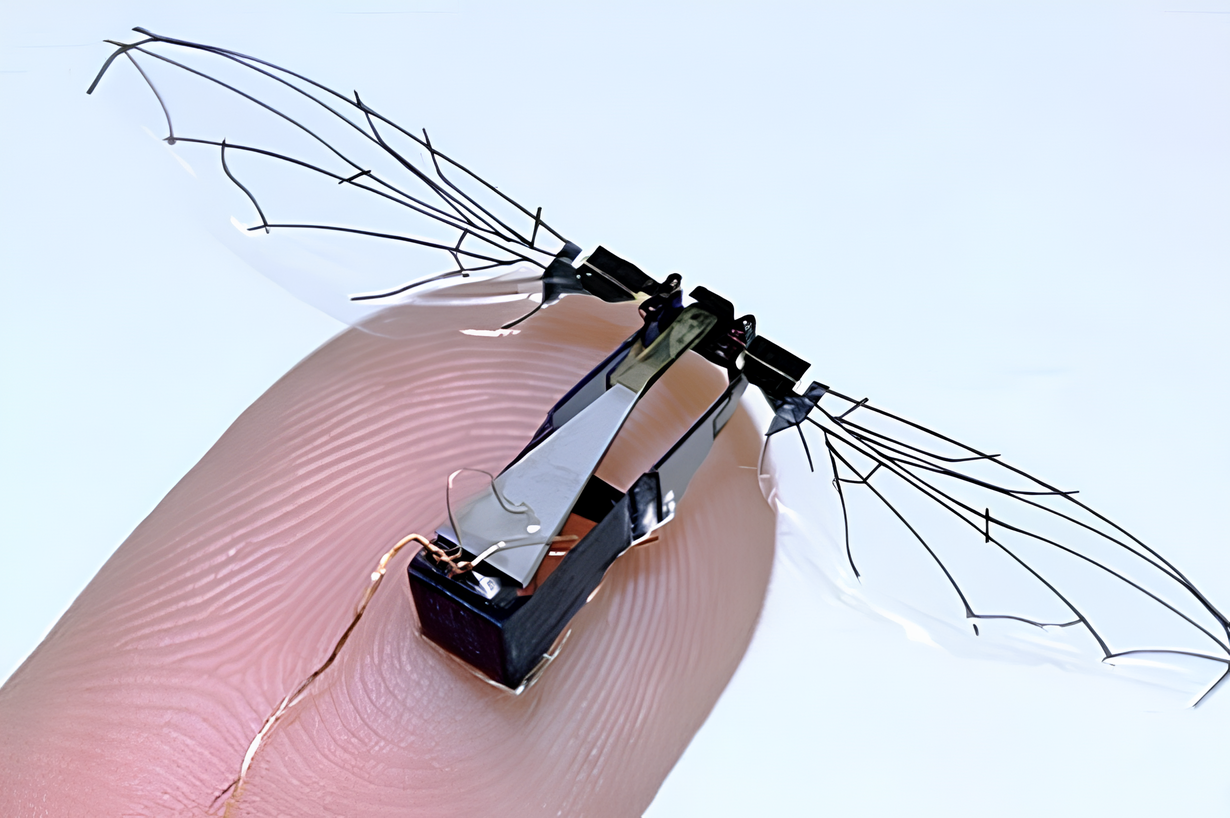

These robotic musicians come in various forms: some are designed to play stringed instruments, while others handle percussion or even wind instruments. Their abilities range from simple note playback to dynamic performances that mimic the expressive variability of human musicians. Such technologies promise to make playing instruments more accessible and to open up new possibilities for composition.

The advent of robots capable of playing musical instruments marks a fascinating evolution in the creative partnership between humans and machines. As I explore the implications and achievements in this field, it's clear that blending music with robotic precision has the potential not just to mimic the musical experience but to enhance it and push it into unprecedented territory.

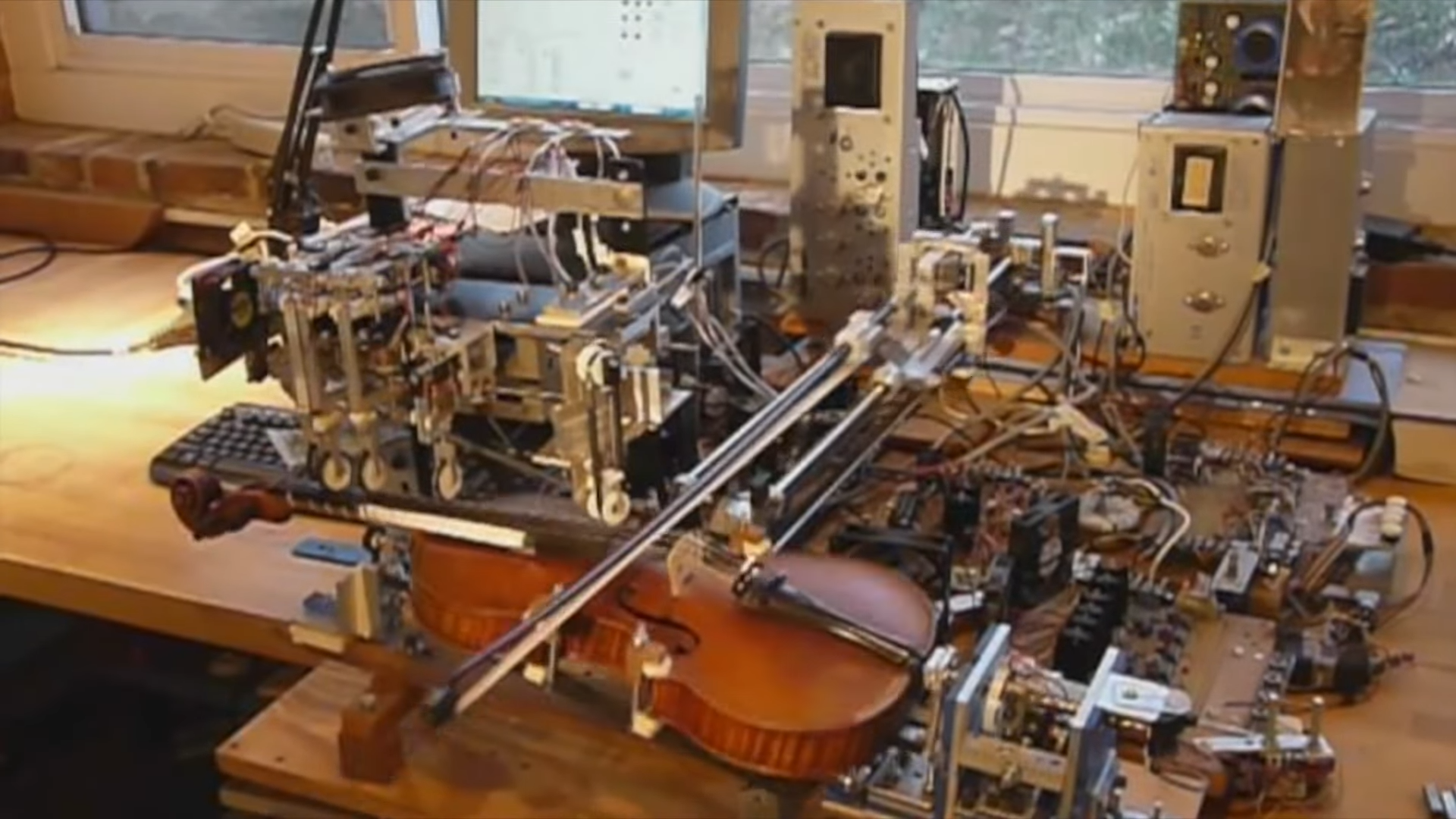

This trajectory of change, now on full display, began with very humble means, and at a time you wouldn't even guess unless you're already a robotics geek. Aren't you?!

Evolution of Musical Robots

Throughout history, the fusion of music and technology has led to the creation of musical robots, signaling a new era in both entertainment and engineering. Here, I'll detail how musical robots have developed from simple automated instruments to complex artificial intelligence-driven musicians.

Historical Development

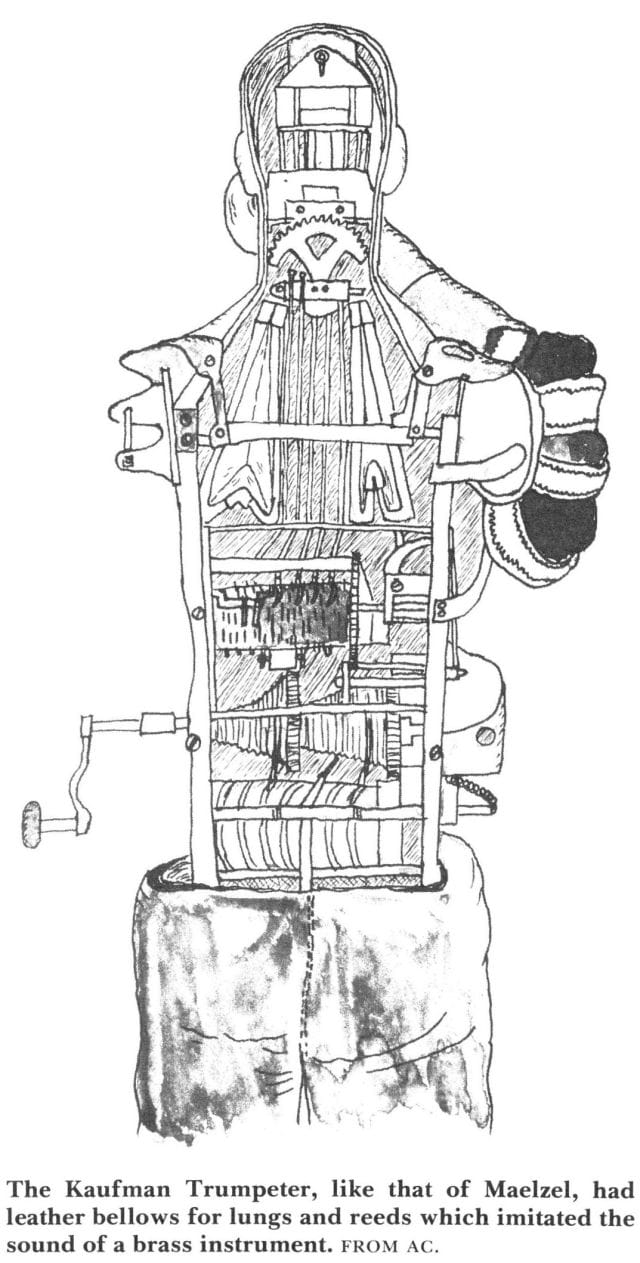

Historically, automation in musical instruments can be traced back to music boxes, where simple machinery triggered pre-set tunes. This form of mechanical music saw advancements with the introduction of player pianos, which used pneumatic or electro-mechanical mechanisms to play predetermined music without human intervention.

Efforts to make robots play the piano have been going on for quite some time. As a matter of fact, far more prototypes have been built to work out finger-joint (phalanx) and tempo mechanics for the piano than for any other instrument.

In the realm of robotics, early automatons were the precursors to today's robotic musicians. These fascinating inventions were often built to resemble humans or animals, performing basic motions while producing music. Although simplistic by modern standards, they were crucial stepping stones that showcased the potential synergy between robots and music.

Modern Advances in Robotic Musicians

Today's robotic musicians have taken a quantum leap in technology and robotics. Engineers and computer scientists have equipped modern musical robots with artificial intelligence (AI) and sophisticated computer software, enabling them to learn, adapt, and interact with human musicians. For instance, Shimon, developed by Gil Weinberg, is a marimba-playing robot that can improvise in response to the music it hears.

Bands like Compressorhead take it a step further: they consist entirely of robots playing instruments such as the guitar, drums, and bass, performing with a precision no human could match. Beyond entertainment, these robotic musicians could redefine the boundaries of music and creativity, opening new pathways for integrating robots into art and culture.

Cutting-edge humanoid robots, capable of emulating human gestures and interactions, bring an additional layer of showmanship to performances, engaging audiences much as live musicians would. Automated instruments like these highlight the harmony between science and art, an interplay that continues to evolve with each technological breakthrough.

Robot-Human Collaboration

I find it fascinating that advancements in robotics and artificial intelligence are not only transforming traditional industrial and technological roles but are also making their mark in the creative realm. Specifically, there is an increasing presence of robots capable of playing musical instruments in collaboration with humans, which showcases a remarkable blend of technology and artistry.

Human-Robot Interactive Performances

Robots such as Shimon, developed by Gil Weinberg, have been designed to interact with humans in musical jam sessions. Shimon is not merely a mechanical player; it is a humanoid robot that plays the marimba and takes part in improvisational jazz performances. Not only does it respond to the musical cues of human artists, but it also contributes creatively, suggesting an evolving role for artificial intelligence in music production. The robot's intricate functions enable it to perceive nuances in music, making it capable of both performing solo and collaborating harmoniously with human musicians.

Educational and Creative Aspects

In music education and creative experimentation, collaboration between humans and robots opens up new avenues. For instance, these robotic systems can assist in composing music and help artists, engineers, and scientists better understand the intersection between artificial intelligence and human creativity. Through this collaboration, a programmer can teach the robot to play traditional keyboard, string, or wind instruments while learning about the intricacies of each through the robot's learning algorithms. This knowledge exchange highlights the educational potential of human-robot collaboration in the arts and technology.

Technological Integration and Instrument Adaptation

In the realm of music, I've witnessed a fascinating evolution with the introduction of robots that are capable of playing instruments. This development represents a harmonious convergence between technological prowess and artistic expression.

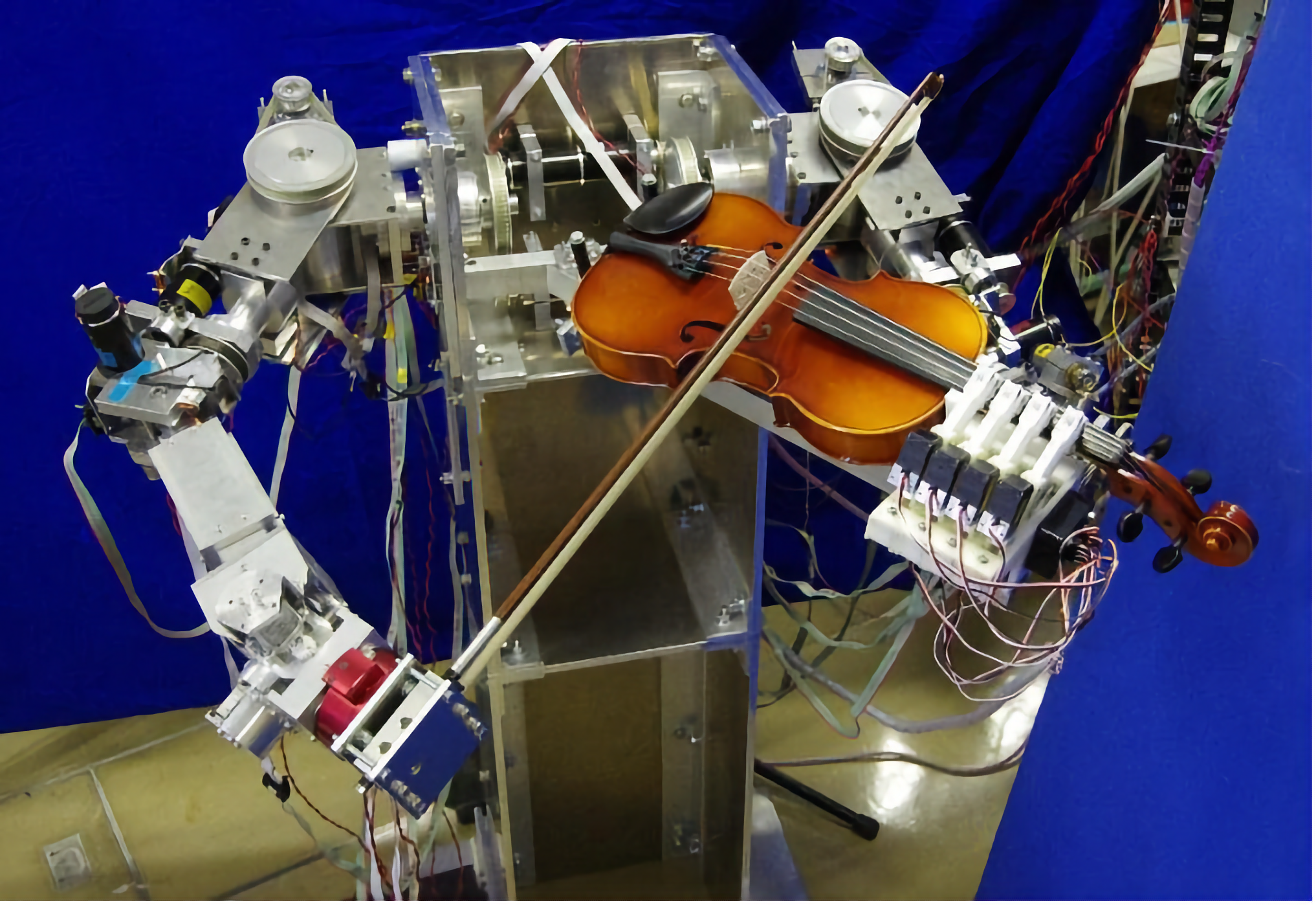

Specialized Instrument Hardware

One of my key observations in this field concerns specialized hardware, which is central to adapting traditional instruments for robotic use. For example, robotic arms equipped with precision mechanics can play instruments such as pianos and guitars by mimicking the intricate movements required. The hardware often includes advanced sensors, which allow robots to interact with instruments like a synth, organ, or drums in a dynamic and responsive manner. In the case of a piano, actuators substitute for human fingers, pressing keys with surprising nuance. The materials and mechanical design of such robotic arms must be carefully worked out by skilled engineers so that they can endure the physical demands of performance.
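To make the idea of actuators pressing keys "with nuance" a little more concrete, here is a minimal sketch, assuming a solenoid-driven key whose loudness is controlled by how long the solenoid is energized. The pulse-width limits (`MIN_PULSE_MS`, `MAX_PULSE_MS`) and the linear curve are hypothetical values chosen for illustration, not taken from any specific robot.

```python
# Hypothetical calibration range for one solenoid key actuator (illustrative values only).
MIN_PULSE_MS = 4.0   # assumed shortest pulse that still sounds the key
MAX_PULSE_MS = 20.0  # assumed pulse for the loudest strike


def velocity_to_pulse_ms(velocity: int) -> float:
    """Map a MIDI velocity (0-127) to a solenoid pulse width in milliseconds.

    Velocity 0 means "no strike" and returns 0.0. The linear mapping is a
    simplifying assumption; a real instrument would likely need a per-key
    calibration curve measured on the hardware.
    """
    if not 0 <= velocity <= 127:
        raise ValueError(f"MIDI velocity must be 0-127, got {velocity}")
    if velocity == 0:
        return 0.0
    fraction = (velocity - 1) / 126  # velocity 1 -> 0.0, velocity 127 -> 1.0
    return MIN_PULSE_MS + fraction * (MAX_PULSE_MS - MIN_PULSE_MS)


if __name__ == "__main__":
    for v in (1, 64, 127):
        print(v, velocity_to_pulse_ms(v))
```

With these assumed limits, velocities 1, 64, and 127 map to 4.0, 12.0, and 20.0 ms respectively. Keeping the mapping in one small function makes it easy to swap in a measured curve per key later.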

Programming and Control Systems

The soul of these musical robots lies within their programming and control systems. I've examined systems that integrate MIDI (Musical Instrument Digital Interface) commands to direct robots, enabling them to follow complex sequences and even improvise in real-time. This aspect marries computer algorithms with physical machinery, resulting in a seamless flow from digital instruction to analog sound. Automation software is critical here to ensure the robots can not only play preprogrammed pieces but also adapt to changes in tempo, dynamics, and style. For instruments like turntables, this system must handle and interpret a wide range of inputs to manipulate sound accurately.
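The path from MIDI instruction to physical motion can be sketched in two steps: decode raw MIDI channel messages into note events, then convert their timing from ticks to seconds at the current tempo, so that a tempo change only requires recomputing the schedule. This is a minimal sketch, assuming events arrive as `(tick, status, data1, data2)` tuples with 480 ticks per quarter note; `ActuatorCommand` and `schedule` are hypothetical names, not part of any real robot's API.

```python
from dataclasses import dataclass

NOTE_OFF = 0x80  # MIDI Note Off status (upper nibble)
NOTE_ON = 0x90   # MIDI Note On status (upper nibble)


@dataclass
class ActuatorCommand:
    time_s: float   # when to act, in seconds from the start of the piece
    channel: int    # MIDI channel 0-15 (could select which robot limb plays)
    note: int       # MIDI note number, e.g. 60 = middle C
    press: bool     # True = press/strike, False = release
    velocity: int   # 0-127, strike strength


def ticks_to_seconds(ticks: int, bpm: float, ppq: int) -> float:
    """Convert MIDI ticks to seconds: one quarter note lasts 60 / bpm seconds."""
    return ticks * (60.0 / bpm) / ppq


def schedule(events, bpm: float, ppq: int = 480) -> list:
    """Turn (tick, status, data1, data2) tuples into time-ordered actuator commands.

    Only Note On / Note Off messages are handled; anything else is skipped.
    A Note On with velocity 0 is treated as a Note Off, as MIDI allows.
    """
    commands = []
    for tick, status, note, velocity in events:
        kind = status & 0xF0     # upper nibble: message type
        channel = status & 0x0F  # lower nibble: channel
        if kind == NOTE_ON and velocity > 0:
            press = True
        elif kind == NOTE_OFF or (kind == NOTE_ON and velocity == 0):
            press = False
        else:
            continue  # e.g. control change, pitch bend
        time_s = ticks_to_seconds(tick, bpm, ppq)
        commands.append(ActuatorCommand(time_s, channel, note, press, velocity))
    # Stable sort keeps the original order for simultaneous events.
    commands.sort(key=lambda c: c.time_s)
    return commands


if __name__ == "__main__":
    # Middle C for one beat, then E for one beat.
    events = [(0, 0x90, 60, 100), (480, 0x80, 60, 0),
              (480, 0x90, 64, 90), (960, 0x90, 64, 0)]
    for cmd in schedule(events, bpm=120):
        print(cmd)
```

At 120 BPM each 480-tick beat lasts 0.5 s; calling `schedule(events, bpm=60)` on the same events stretches every timestamp by a factor of two, which is one simple way a control system could follow a change in tempo.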

Impact on Music and Culture

As robotics intertwines with music, it carves out a niche in both the production of sound and the cultural perception of music. Its influence ranges from changes in genres to societal reception, defining an era in which technology and traditional art forms merge.

Spotify has not banned AI-generated music. In September 2023, Spotify CEO Daniel Ek confirmed that Spotify does not plan to ban it. Ek said that AI tools that auto-tune music are acceptable, but tools that mimic artists are not.

Spotify has nonetheless removed AI-related content. In April 2023, it removed "Heart On My Sleeve", a track featuring AI-cloned voices of Drake and The Weeknd that had garnered millions of streams after its release. In May 2023, it removed 7% of the songs created with Boomy, an AI music start-up, amounting to "tens of thousands" of tracks, after Universal Music Group flagged Boomy for allegedly using bots to boost its streaming numbers.

Spotify also offers AI features of its own. To find the AI DJ, open the Spotify app, go to Home > Music, tap the large blue DJ card, and then tap the Play button. To use AI Playlist Art, tap the search icon in the app, tap the AI Playlist Art panel, and then press Create Art.

Influence on Music Genres and Styles

Robotic musicians have made tangible changes to the soundscapes of various music genres. For example, certain robotic systems designed to play acoustic instruments push the boundaries of jazz by creating sounds and rhythms that might challenge even the most skilled jazz musician. Moreover, using robotics in music production allows for the precise replication and transformation of sounds that are physically demanding or impossible for human artists to achieve. This opens the door to new musical styles that integrate electronic and acoustic elements in ways not previously possible.

Robotic involvement in music production ranges from playing traditional instruments to generating entirely new ones through cymatics, the study of visible sound and vibration. Because cymatics makes sound visible, it could add a visual layer to musical performances, engaging the eyes as well as the ears and creating a multidimensional experience for audiences.

Perception and Reception by Society

My observation of society's reception of robotics in music uncovers a range of emotions, from fascination to skepticism. Robots taking center stage in musical performances not only shift the landscape of live entertainment but also challenge our traditional views of creativity and artistry. Some view these mechanical performers as mad-scientist creations, akin to something out of the Star Wars saga, while others regard them as the next step in the evolution of art and culture.

In media representations, robotic musicians are sometimes characterized by a sense of novelty. They can be seen as an extension of the human body and mind, exploring new realms of creative expression. Yet, within the art community, acceptance is cautious as artists and listeners grapple with the implications of technology-driven music, questioning the emotional depth that robots might convey in their performances.

This intersection of robotic innovation and music blurs the lines between technology and human emotion, between what's considered traditional culture and what's labeled as avant-garde artistry. From album releases to live concerts, the integration of robots is transforming not just the sounds we hear, but also the fundamental relationship between creator, performer, and audience in the musical landscape.

I have been trying to learn to play the guitar for two decades, and I have more guitars I don't know how to play than results to show off. So I am patiently waiting for a robot that can play, on request, whatever gives me a dopamine rush. Until then... Spotify is my jam!